Surogate LLMOps Enterprise

Surogate OSS

Surogate Enterprise

Pretraining; full fine-tuning; LoRA / QLoRA

BF16, FP8, NVFP4, BnB; mixed-precision training

Multi-GPU; Multi-node (Ray-based)

Native C++/CUDA engine; kernel fusions; multi-threaded scheduler

Deterministic configs + predefined recipes

DDP efficiency (comm/compute overlap)

Optimizer options (e.g., 8-bit AdamW)

Dense + MoE model support

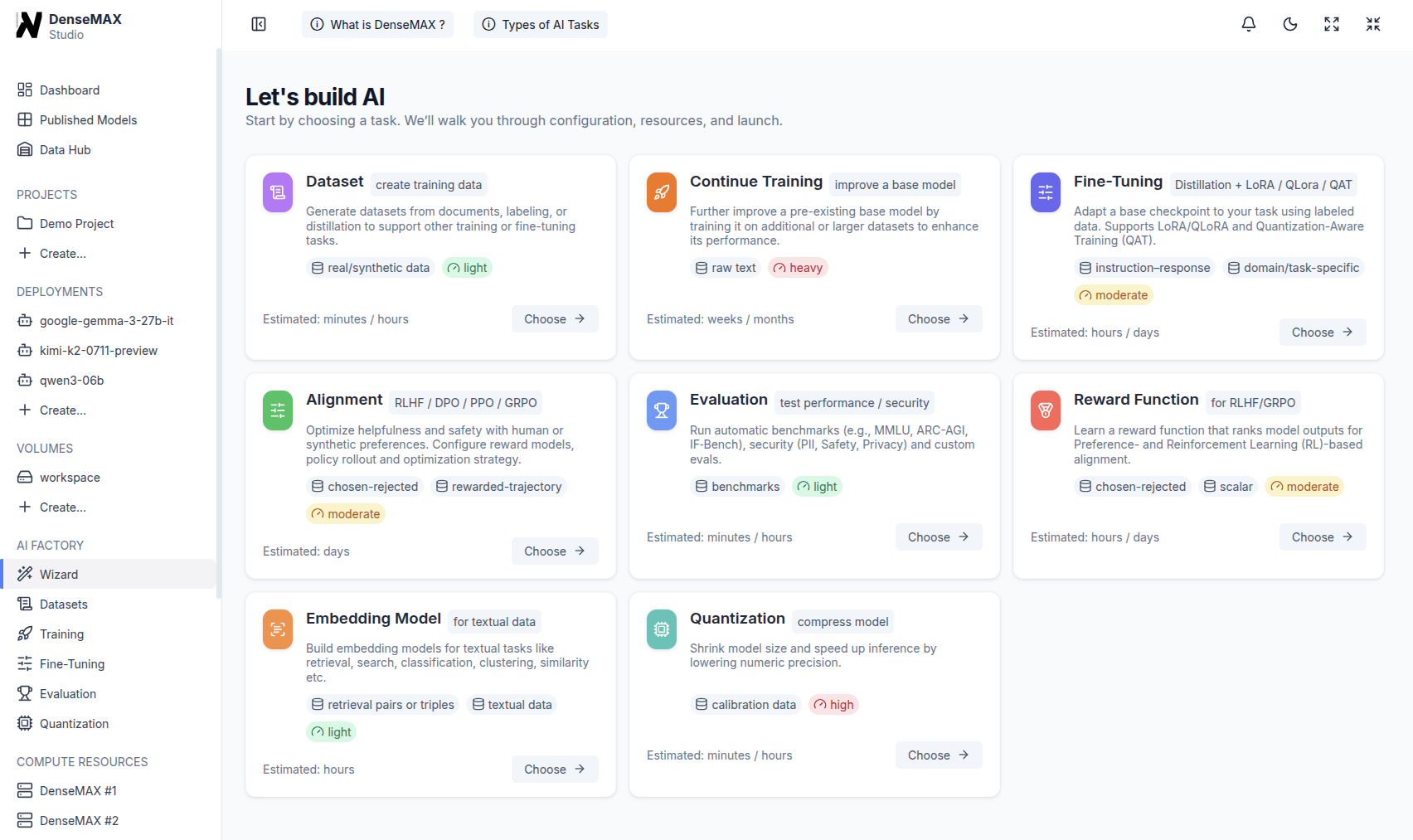

GUI workflows; no-code pretraining & fine-tuning; predefined recipes

Reinforcement fine-tuning; alignment: DPO / PPO / GRPO / GDP

Chinchilla scaling rules for pretraining

Data Hub with Git-like versioning

Advanced model serving (KV-aware cache routing, GPU sharding, replicas, disaggregated serving)

Model gateway (usage tracking & security)

Evaluation suite + red-teaming(bias/toxicity/leakage, etc.)

Synthetic data generation; embeddings training; reward function tooling

Workload/container isolation

Deploy on DenseMAX Appliance + public clouds

Adaptive Training (online hyperparameter adjustment to prevent drift/collapse)

Role-based access control (RBAC)

SOC2 compliance commitment

Dedicated CSM; SLAs / guaranteed support response times

Org-grade governance (promotion/approvals, stricter policy enforcement)

Multi-tenant controls / isolation for departments

Air-gapped + hardened deployment patterns

High availability (HA) options for serving + control plane

Backup/restore + disaster recovery (DR) procedures

Security hardening: encryption at rest + customer-managed keys (KMS/HSM)

Secrets management integration (Vault/KMS)

Supply-chain security: vulnerability scanning + SBOMs for images/artifacts

Lineage tracking across data → run → artifact → deployment